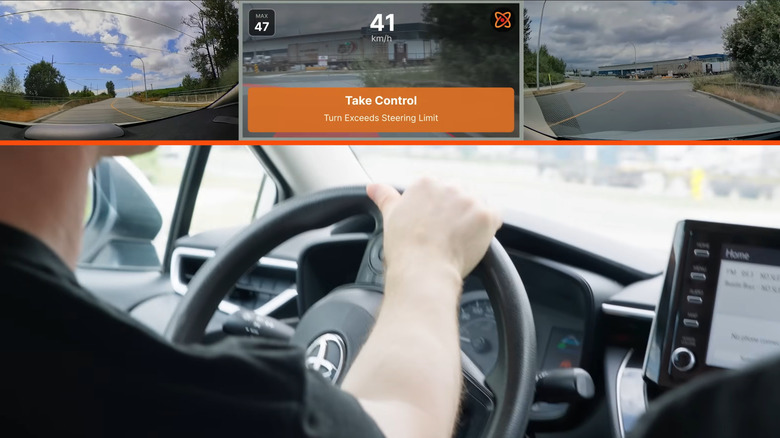

There’s been a lot of buzz around aftermarket advanced driving assistance systems lately. Whether it’s a hacker-celebrity showing off their DIY ride on CNET or a couple taking their old Prius on a hands-free cross-country road trip, it seems like everybody’s trying to get in on the action. But let’s get one thing straight: this is NOT real self-driving. Not even close.

What we’re really talking about here are SAE Level 2 systems, which the SAE J3016 standard classifies as partial driving automation. When you’re behind the wheel, responsibility is still all on you, legally and morally. Bottom line: your hands need to be ready and your eyes glued to the road.

Things get murky because these gadgets operate in a legal grey area. That can expose users to legal and financial headaches that aren’t obvious at first glance. Like Icarus, those who fly too close to the sun may get burned. Let’s dig in.

Hotwiring Your Car’s Brakes Isn’t a Good Idea

So how do these aftermarket systems even work? Usually, you mount a box packed with cameras and screens to your windshield with some extra-sticky tape. After some fiddling with the wiring (how much depends on the car), the device taps into your car’s CAN bus, essentially its central nervous system. It listens in on various sensors, combines that data with its own camera feeds, and relays altered commands to your steering, throttle, and brakes.

Let’s be real: the typical car enthusiast you know probably isn’t signing up for this. The buyer is more like the friend who couldn’t decide what car to get and ended up with a Wrangler. Still, there are YouTube tutorials for installing these devices. What could possibly go wrong?

If you’re willing to take the risk, let’s look at the tech. You’d think these gadgets would use the latest hardware, right? Not quite: they run on the same class of processor found in the 2018 Samsung Galaxy S9. That’s aging hardware expected to work across a wide range of vehicles and configurations, which is one-size-fits-all at its worst, now applied to steering and braking.

Trust the System—Or Not

When car manufacturers develop features like these, they generally work to rigorous functional-safety standards such as ISO 26262, which lays out a lengthy checklist of development and verification procedures. The aftermarket players? They can simply say they’re “following the guidelines.” That’s lawyer-speak for excusing themselves from real accountability. Instead of test tracks, their lab is our public roads: the real testing happens while you’re out there, and every mile and every awkward mishap is part of the process.

Reddit hosts ongoing discussions of crashes involving these systems, with some users openly admitting to police that they were using an aftermarket device and others posting anonymously. Can you imagine filing an insurance claim in a no-fault state like New York for a crash caused by someone who stuck a few cameras on their windshield? Yes, poor choices are part of life, but making them on behalf of innocent bystanders is another matter altogether. And faster than you can say “bad idea,” reports surface of users whose claims were denied or whose insurers declined to cover them again.

When an Upgrade Costs More than You Think

Rewind to 2016, when NHTSA put Comma on the hot seat by asking the company to prove its product was safe. Instead of doing so, Comma pivoted and dropped the product altogether. Red flag! The company then repositioned its technology as research software, a sneaky legal move that effectively shifts responsibility onto you.

Now let’s examine the Terms of Service, because they’re packed with landmines:

First up, there’s a clear **warning**: “THIS IS ALPHA QUALITY SOFTWARE FOR RESEARCH PURPOSES ONLY. NOT A PRODUCT.” Think of it as a get-out-of-jail-free card. If something goes south and everything crashes and burns, they can argue they never supplied a defective product; you were simply misusing “research tools.”

Now for the real kicker: the indemnification clause. If someone gets hurt in a crash involving Comma’s tech, you might be on the hook for covering the company’s legal fees and any court-awarded damages.

Sure, every Level 2 system places legal responsibility on the driver. So why single this out? Because reputable OEMs put their systems through rigorous standards before they reach consumers. A quick search for “Tesla Autopilot crash” shows that even factory systems can fail. So why aren’t aftermarket systems that claim similar capabilities held to the same scrutiny?

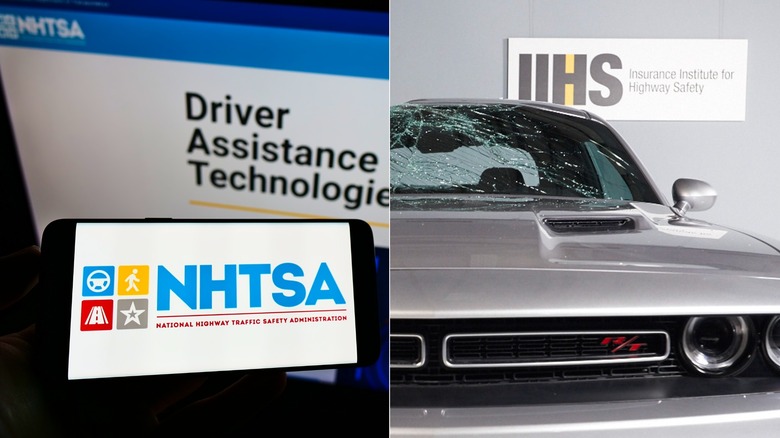

Time for Some Real Oversight

We reached out to the experts for clarity on these issues:

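NHTSA told us these devices are “subject to defect authority,” meaning any company selling unsafe equipment must initiate a recall. That sounds reassuring, except for the “make inoperative” provision, which doesn’t apply to individuals. Because owners install these devices themselves, the prohibition on disabling a car’s safety equipment never reaches them. A loophole like that is essentially an open door.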

The folks at the Insurance Institute for Highway Safety (IIHS) stressed that these gadgets are merely “driver convenience systems,” not true safety systems. They also said they have no plans to formally evaluate any aftermarket system that claims to take active control of the vehicle. And their evaluations of simpler aftermarket warning systems found that those devices underperform their factory-installed counterparts.

Next, we reached out to SEMA (the Specialty Equipment Market Association), the trade group that backs aftermarket modifications, expecting a safety-validation roadmap. What we got amounted to an admission that zero actionable guidelines are in place. As for the concerns researchers have raised, let’s just say SEMA stressed the importance of “clear communication.”

When we tried to reach Comma.ai through its support email, we got an automatic reply saying the company doesn’t respond to email. Instead, it directed us to file a ticket, yet none of its ticket forms allows for a direct inquiry.

In short, we have a “convenience system” that IIHS won’t test, that SEMA admits has no safety standards, and that NHTSA could step in on but hasn’t, thanks to loophole shenanigans.

Innovation? Absolutely. But remember, we’re not discussing a novel app for ordering tacos. We’re talking about unpolished alpha code running on outdated hardware, sending spoofed signals to your car’s steering while you cruise through public streets, possibly around your loved ones.