Back in 385 B.C.E., the philosopher Plato wrote about Socrates challenging one of his students with the perplexing “doubling the square” problem. Asked to double the area of a square, the student simply doubled the length of its sides. He did not realize that this quadruples the area, and that the side of the correct new square is the diagonal of the original square.
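
A quick worked check makes the student's error concrete. Writing $s$ for the original side length (a symbol introduced here for illustration), the diagonal $d$ follows from the Pythagorean theorem, and the candidate areas compare as follows:

$$
d = \sqrt{s^2 + s^2} = s\sqrt{2}, \qquad s^2 \;\text{(original)}, \qquad (2s)^2 = 4s^2 \;\text{(doubled side)}, \qquad d^2 = 2s^2 \;\text{(square on the diagonal)}.
$$

Doubling the side quadruples the area, while the square built on the diagonal has exactly twice the original area.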

A team from the University of Cambridge and the Hebrew University of Jerusalem took up this historical puzzle to see how ChatGPT, a prominent AI chatbot, would respond to it. They chose it because its solution is not immediately obvious, and because it sits at the center of a debate that has run for some 2,400 years: does mathematical insight come from within us, revealed through reasoning, or do we gain it only through experience?

Because ChatGPT is trained mainly on text rather than images, the researchers reasoned that the answer to this challenge was unlikely to appear in its training data. If ChatGPT nonetheless reached the right solution on its own, that could suggest mathematical ability is learned rather than innate.

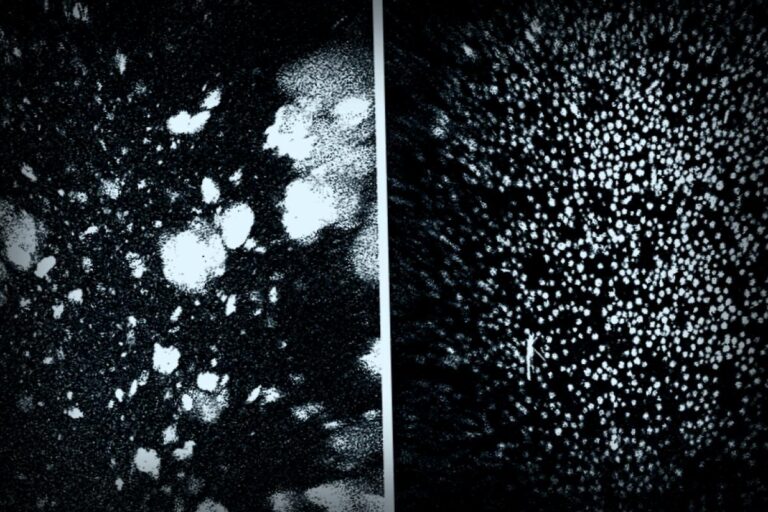

The researchers then pushed further in their study, published on September 17 in the International Journal of Mathematical Education in Science and Technology. They asked ChatGPT to apply a similar principle to double the area of a rectangle. ChatGPT replied that the problem had no geometric solution, because a rectangle’s diagonal cannot be used in that way.

However, Nadav Marco, a Hebrew University scholar visiting the University of Cambridge, and Andreas Stylianides, a professor of mathematics education at Cambridge, knew that a geometric solution does exist.
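
The article does not describe the researchers' solution. One construction that works, assuming the doubled rectangle must keep the original's proportions (just as the doubled square must stay a square), scales each side by $\sqrt{2}$. That can be done geometrically by taking the diagonal of a square built on each side. With side lengths $a$ and $b$ (symbols introduced here for illustration):

$$
a' = \sqrt{a^2 + a^2} = a\sqrt{2}, \qquad b' = \sqrt{b^2 + b^2} = b\sqrt{2}, \qquad a'b' = 2ab.
$$

The rectangle's own diagonal does not do the job: a square on it has area $a^2 + b^2 = 2ab + (a - b)^2$, which equals $2ab$ only when $a = b$. Without the proportion constraint the task is trivial, since doubling one side already doubles the area. This is a sketch of one possible construction, not necessarily the one in the study.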

Marco said the odds that ChatGPT’s false claim appeared in its training data were vanishingly small. That suggests the AI was improvising, building its answer on the earlier discussion of the doubling-the-square problem, which points to a form of learning rather than innate ability.

“When faced with a new challenge, our instinct often drives us to test ideas based on our prior experiences,” Marco said in a statement on September 18. In the experiment, he suggested, ChatGPT seemed to do something similar, generating its own hypotheses and candidate solutions the way a learner would.

Do Machines Think Like Us?

The research offers a fresh perspective on artificial intelligence and its capacity for “reasoning” and “thinking.” Because ChatGPT appeared to improvise its responses, and even made mistakes like those of Socrates’ student, Marco and Stylianides proposed that a familiar educational concept, the zone of proximal development (ZPD), may help explain its behavior. The ZPD describes the gap between what a learner can do on their own and what they can achieve with appropriate guidance.

According to the researchers, ChatGPT may be spontaneously using a similar framework, working its way toward solutions to unfamiliar problems when given the right prompts.

This illustrates the classic “black box” problem in AI: the internal steps by which a model reaches its conclusions remain hidden. Even so, the researchers say the work highlights opportunities to make AI more useful.

Stylianides pointed out a key difference: students can trust proofs in reputable textbooks, but they can’t yet assume that proofs generated by ChatGPT meet the same standard. Understanding and evaluating AI-generated proofs, he said, is emerging as a vital skill that mathematics curricula should teach.

The researchers also call for more effective prompting in educational settings, favoring prompts such as “let’s explore this problem together” over “just give me the answer.”

The researchers urge caution in interpreting the results and warn against concluding that large language models such as ChatGPT think the way we do. Still, Marco described the AI’s behavior as “learner-like.”

Looking ahead, the team sees plenty of room for further research. Newer models could be tested on a wider range of mathematical problems, and ChatGPT could be combined with dynamic geometry systems or theorem provers. Such combinations could create richer digital environments that support collaborative exploration for teachers and students alike.