So, here we go again. OpenAI has made an announcement that’s making waves, and it leaves us torn between ‘finally, they’re taking action’ and ‘wait, isn’t this an invasion of our privacy?’

As reported by Futurism, the tech giant is now scanning your ChatGPT conversations for potentially harmful content. And if it decides you might hurt someone else, it may refer the case to the police.

This isn’t some fictional nightmare scenario.

After a year of AI-related disasters (chatbots nudging teens toward suicide, people landing in the hospital after AI-linked bizarre behavior, and at least one suicide connected to an OpenAI therapy bot), the company seems to have realized it’s time to, well, keep an eye on this ticking time bomb.

Here’s how the new system works: messages deemed harmful are flagged by automated classifiers, and then human reviewers take a look. Depending on their judgment, reviewers can ban the user or, if someone is considered a threat to others, notify the authorities.

Interestingly enough, OpenAI says it is not referring self-harm cases to law enforcement in order to, as it puts it, respect users’ privacy. That move looks more legal than moral.

But here’s the irony: OpenAI is currently fighting The New York Times and other publishers in court, and those publishers want access to users’ chat logs for their copyright cases.

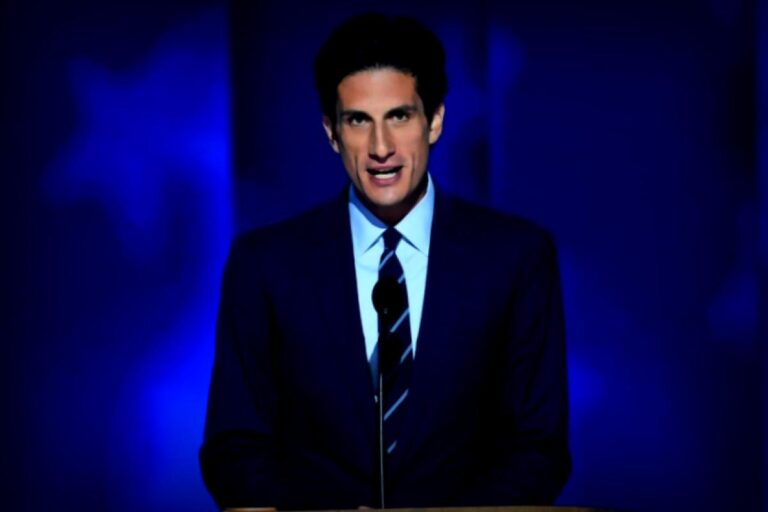

OpenAI’s stance? User privacy comes first. Yet CEO Sam Altman has himself cautioned that ChatGPT conversations aren’t legally protected and could be produced in court if required.

So, to recap the paradox: OpenAI won’t hand your chats over to publishers trying to protect their intellectual property, but it will share them with the police if its systems flag you as a threat.

All of this calls their privacy claims into question, especially since they admit your chats were never truly private to begin with. At least they’re finally acknowledging that their AI could be turning some people into digital shells.

What’s your take? Does OpenAI’s surveillance approach go too far, or is it something we needed? Are you more worried about privacy, or relieved that they’re finally taking some responsibility?