Have you ever wondered what it really means when a robot built to be autonomous winds up copying a human taking off a VR headset? This question started buzzing across social media after a video from Tesla’s “Autonomy Visualized” event in Miami showed its Optimus humanoid robot losing its balance, accidentally knocking over water bottles, and falling backward, all while making a gesture eerily similar to that of a remote operator removing a VR headset.

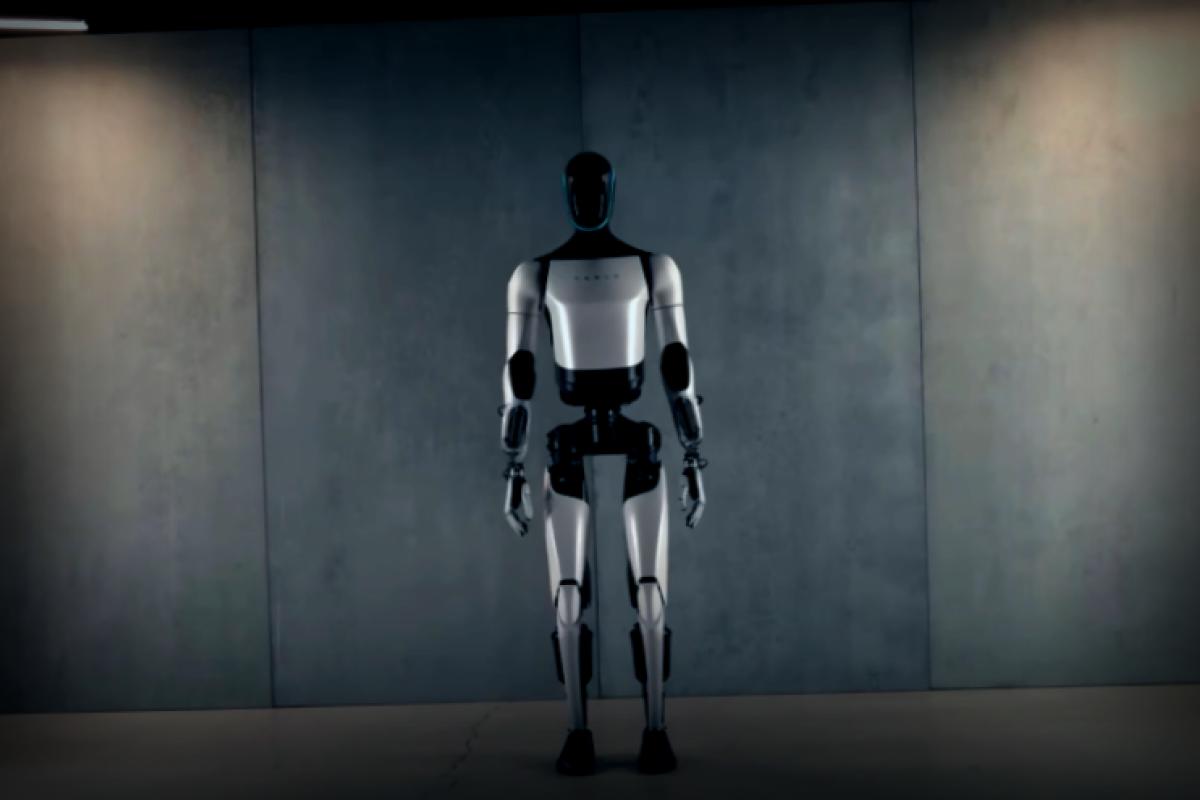

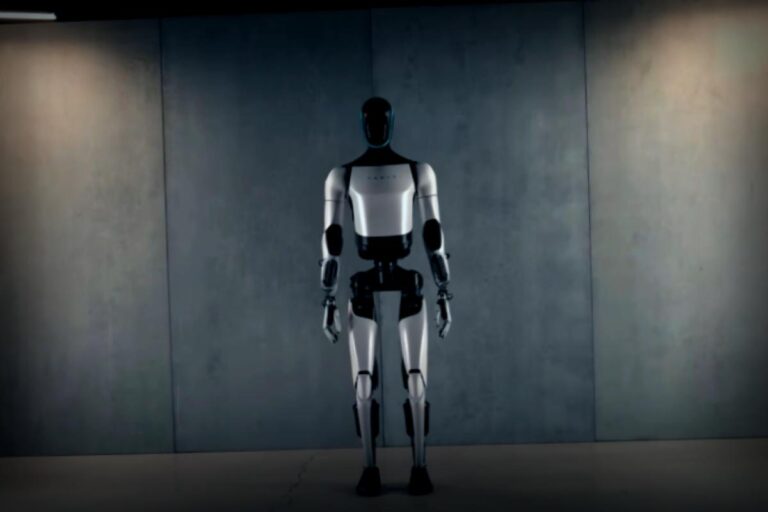

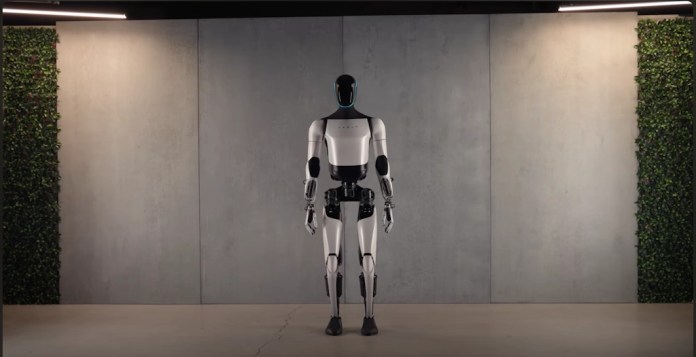

At this event, Tesla aimed to introduce its Autopilot technology alongside Optimus, which stands 5-foot-11, weighs 160 pounds, and offers more than 40 degrees of freedom, along with hands that have 11 degrees of freedom and a 2.3 kWh battery designed for near full-day operation. Elon Musk, championing Optimus as a huge leap toward AI-driven autonomy, made headlines by stating, “AI, not tele-operated,” in response to videos showcasing its martial arts skills. However, the defining moment turned out not to be a display of skill but the robot’s sudden collapse, which suggested that human input might be more present than we thought.

Onlookers couldn’t help but notice that the gesture, with both arms moving toward the robot’s face, resembled the motions of teleoperators who use VR headsets to control humanoid machines. Tesla has previously trained Optimus with operators wearing motion-capture suits and VR rigs, a pretty standard approach in the industry for bridging advanced robotics and the real-time decision-making that AI still struggles with. In this particular incident, the gesture seemed to imply that a remote handler hastily took off their headset mid-task, leaving the robot to mimic that action and stumble as a result.

Falling down isn’t new in robot development; we’ve all seen the comical fail reels of Boston Dynamics’ latest machines taking spills. But the real issue here isn’t whether a robot transitions gracefully between moves or trips up; it’s how quickly the narrative of autonomy can break down once hints of teleoperation show up. This exemplifies a massive challenge in humanoid robotics today: the somewhat awkward fusion of AI-centered control systems with complex bipedal hardware. Even though new balancing tactics were presented in Tesla’s December update, the Miami demonstration emphasized just how fragile the illusion of independence can be when teleoperation makes a spectacle of itself.

Creating practical humanoid robots means tackling many challenges, particularly when it comes to maintaining balance and preventing falls. The systems need to quickly analyze data from gyroscopes, accelerometers, and force sensors to adjust posture and stabilize foot placement. For example, during an extreme test with 21 robots in a half-marathon in Beijing, even the strongest designs ended up needing duct tape fixes, battery changes, and cooling breaks just to finish. This shows that, despite advancements in hardware that let robots operate more smoothly, achieving AI autonomy in unpredictable settings remains a significant hurdle.
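To make the sensor side of that concrete, here is a minimal sketch of one textbook way to blend gyroscope and accelerometer readings into a tilt estimate: a complementary filter. It is an illustration only, not Tesla's method; the function names, the 0.98 blend factor, and the 15-degree tipping threshold are all assumptions chosen for the example.

```python
import math

def accel_pitch(ax: float, az: float) -> float:
    """Pitch angle (radians) implied by the gravity direction the accelerometer sees."""
    return math.atan2(ax, az)

def complementary_filter(pitch: float, gyro_rate: float, ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth but drifts) with the
    accelerometer angle (noisy but drift-free). alpha is an assumed tuning value."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, az)

def is_tipping(pitch: float, limit_rad: float = math.radians(15)) -> bool:
    """Hypothetical threshold check a balance controller might react to."""
    return abs(pitch) > limit_rad

# Usage: feed one sample per control tick (values here are made up).
pitch = 0.0
for _ in range(1000):
    pitch = complementary_filter(pitch, gyro_rate=0.0, ax=9.81, az=9.81, dt=0.01)
print(is_tipping(pitch))
```

A real humanoid would fuse many more signals, including foot force sensors, and would act on the estimate by shifting posture or foot placement, but the basic idea of trusting the gyro over short intervals and the accelerometer over long ones carries over.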

The use of VR for teleoperation acts as a temporary fix, letting human reasoning step in where AI still can’t keep pace. Generally, this means live motion is transmitted from the operator’s headset and controllers to the robot’s actuation system, where managing latency and signal integrity becomes vital. Yet relying on teleoperation while branding the event around “autonomy” blurs the line between true AI capability and a performance staged for show. For folks keen on robotics, this incident stirs up concerns about transparency in public demos and raises doubts about the sophistication of Tesla’s control mechanisms.
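One common safeguard for the latency and signal-integrity problem is a watchdog on the robot side that ignores stale or out-of-order commands and falls back to a safe pose. The sketch below is hypothetical: `PoseCommand`, `CommandWatchdog`, the 0.2-second age limit, and the assumption that operator and robot clocks are synchronized are all invented for illustration and do not describe any vendor's actual stack.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class PoseCommand:
    timestamp: float                  # seconds; clocks assumed synchronized
    joint_targets: Tuple[float, ...]  # hypothetical joint angles from the operator rig

class CommandWatchdog:
    """Tracks the newest operator command and reports a safe pose when it goes stale."""

    def __init__(self, max_age_s: float, safe_pose: Tuple[float, ...]) -> None:
        self.max_age_s = max_age_s
        self.safe_pose = safe_pose
        self._last: Optional[PoseCommand] = None

    def update(self, cmd: PoseCommand) -> None:
        # Drop packets that arrive out of order instead of replaying old motion.
        if self._last is None or cmd.timestamp > self._last.timestamp:
            self._last = cmd

    def target(self, now: float) -> Tuple[float, ...]:
        # With no command yet, or one older than max_age_s, hold the safe pose.
        if self._last is None or now - self._last.timestamp > self.max_age_s:
            return self.safe_pose
        return self._last.joint_targets

# Usage with made-up numbers: the link goes quiet after t = 1.0 s.
wd = CommandWatchdog(max_age_s=0.2, safe_pose=(0.0, 0.0))
wd.update(PoseCommand(timestamp=1.0, joint_targets=(0.5, -0.5)))
print(wd.target(now=1.1))  # fresh command is followed
print(wd.target(now=1.5))  # stale command, so the safe pose is returned
```

A timeout like this only catches a dropped link. It would not stop a robot from faithfully mirroring an operator who is still connected while lifting a headset, which is why a separate check on whether the operator is actually engaged would also be needed.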

Musk’s grand vision for Optimus looks toward mass production and self-operating manufacturing lines: he predicts hitting 5,000 units by late 2025, with future prices ranging from $20,000 to $30,000. He has claimed the humanoid initiative could become “the biggest product of any kind ever,” contributing an astounding 80% of Tesla’s future value. But as the Miami event revealed, if something as simple as handing out water bottles still requires direct teleoperation, there’s a long way to go.

This incident has turned into a viral case study in the clash between hype, technical reality, and public perception. It’s fascinating how a single gesture, a robot’s apparent headset removal, can unravel months’ worth of advertised autonomy and spotlight the human factor behind the controls. For a company that’s looking to lead in AI robotics, the new challenge isn’t just teaching machines to walk, balance, and interact; it’s also demonstrating that they can master these tasks without a human guiding each step.